Fancy viewfinder tricks, hot pixels, sky processing and more.

After Google launched its wildly successful Night Sight mode on the Pixel 3, its developers set their sights on an even tougher low-light goal for the Pixel 4: astrophotography. The feature has proved so popular that Google has published a blog post explaining how it works. In a nutshell, letting non-experts shoot the stars requires a big dose of AI help.

The trick to capturing the night sky is taking long exposures. As such, Google bumped the Pixel 4’s maximum exposure time to four minutes, up from just one minute on the Pixel 3. However, shooting a single four-minute exposure would blur the stars, so the Pixel 4 takes up to 15 exposures, each no longer than 16 seconds. That makes the stars look like points of light rather than streaks, but it introduces a host of other problems that must be solved.
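The arithmetic behind those limits is simple: 15 frames of 16 seconds each add up to 240 seconds, or four minutes. The sketch below illustrates one way a total exposure budget could be split into short frames under those two caps. The function name `plan_exposures` and the equal-length splitting rule are assumptions made for illustration, not Google's actual scheduling logic.

```python
import math


def plan_exposures(total_seconds, max_frame_seconds=16, max_frames=15):
    """Split a total exposure budget into equal-length short frames.

    Hypothetical helper: the 16-second and 15-frame caps come from the
    article; the even split is an assumption for illustration only.
    """
    if total_seconds <= 0:
        raise ValueError("total_seconds must be positive")

    # The budget can never exceed max_frames * max_frame_seconds (240 s here).
    budget = min(total_seconds, max_frame_seconds * max_frames)

    # Use the fewest frames that keep every frame within the per-frame cap.
    frames = min(max_frames, math.ceil(budget / max_frame_seconds))
    return frames, budget / frames


if __name__ == "__main__":
    print(plan_exposures(240))  # four-minute budget
    print(plan_exposures(60))   # one-minute budget
```

With a 240-second budget, this yields 15 frames of 16 seconds each, matching the Pixel 4's stated maximum.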

For instance, any image sensor has defective “hot” or warm pixels that are invisible in normal shots, but appear as tiny dots during long exposures. To get rid of those, the Pixel 4 looks for bright outlying pixels, then hides them “by replacing their value with the average of their neighbors,” Google said. That results in a loss of image information, but “does not noticeably affect image quality.”
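The neighbor-averaging idea can be sketched in a few lines. This is a minimal illustration that works on a grayscale image stored as a list of rows. The function name `suppress_hot_pixels`, the 3×3 neighborhood, and the fixed brightness threshold are all assumptions; Google's post does not spell out its outlier test at this level of detail.

```python
def suppress_hot_pixels(image, threshold=50):
    """Replace bright outlier pixels with the mean of their neighbors.

    `image` is a list of rows of brightness values. A pixel counts as
    "hot" when it exceeds the mean of its surrounding pixels by more than
    `threshold` (an assumed rule, chosen only for illustration).
    """
    height = len(image)
    width = len(image[0])
    result = [row[:] for row in image]  # leave the input untouched

    for y in range(height):
        for x in range(width):
            # Collect the up-to-8 neighbors, clipping at the image border.
            neighbors = [
                image[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
                if (ny, nx) != (y, x)
            ]
            if not neighbors:
                continue  # a 1x1 image has nothing to compare against
            mean = sum(neighbors) / len(neighbors)
            if image[y][x] - mean > threshold:
                result[y][x] = mean

    return result


if __name__ == "__main__":
    frame = [
        [10, 10, 10],
        [10, 255, 10],
        [10, 10, 10],
    ]
    for row in suppress_hot_pixels(frame):
        print(row)
```

In the sample frame, only the bright center pixel is replaced. The surrounding pixels are darker than their neighborhood average, so they are left alone.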

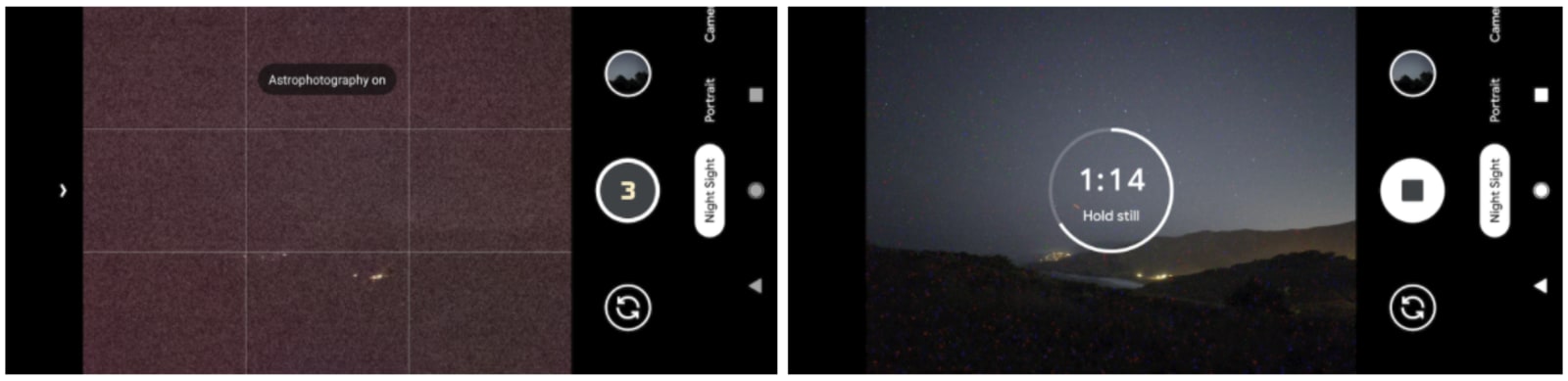

Another issue is that a smartphone’s display can’t show night sky detail, making composition a challenge. When you start taking a photo, the Pixel 4 displays each of the up to 15 exposures as it’s captured (above), giving you a more detailed look. If you don’t like what you see, you can just move your phone, and it’ll restart the process. It takes a similar approach to autofocus, capturing two one-second exposures that help focus the lens. If it’s too dark even for that, the lens simply focuses to infinity.

With multiple night exposures, the sky can appear too bright, especially during a full moon. The Pixel 4’s AI can detect which part of the image is sky, then dim that part to make the image more natural. “Sky detection also makes it possible to perform sky-specific noise reduction, and to selectively increase contrast to make features like clouds, color gradients, or the Milky Way more prominent,” Google said.
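Once a sky mask exists, the dimming step itself is straightforward. The sketch below assumes the mask has already been produced by some detector and holds a value between 0 (not sky) and 1 (sky) for each pixel. The function name `dim_sky` and the linear blend are illustrative assumptions, not a description of how the Pixel 4 actually adjusts the sky.

```python
def dim_sky(image, sky_mask, dim_factor=0.7):
    """Darken the pixels marked as sky, leaving the rest unchanged.

    `image` and `sky_mask` are lists of rows with the same shape. Mask
    values run from 0.0 (not sky) to 1.0 (sky). A full-sky pixel is
    multiplied by `dim_factor`; partial mask values blend proportionally.
    """
    return [
        [
            pixel * (1.0 - mask * (1.0 - dim_factor))
            for pixel, mask in zip(image_row, mask_row)
        ]
        for image_row, mask_row in zip(image, sky_mask)
    ]


if __name__ == "__main__":
    image = [
        [100, 100],  # top row: sky
        [100, 100],  # bottom row: landscape
    ]
    sky_mask = [
        [1.0, 1.0],
        [0.0, 0.0],
    ]
    for row in dim_sky(image, sky_mask, dim_factor=0.5):
        print(row)
```

Using a soft mask rather than a hard on/off one lets the adjustment fade out gradually near the horizon, which avoids a visible seam between sky and landscape.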

Just bear in mind that you can’t capture stars and the moon at the same time, as the moon’s brightness overpowers the stars. Likewise, when you capture a starry sky with no moon, the landscape will only appear as a silhouette. Still, Google showed off some of the results (below), which are incredibly impressive for a smartphone.