Real acting, virtual humans

With character-driven games, facial animation is more important than ever – and modern games need vast amounts of it. The scripts for some of this year’s AAA releases span thousands of pages (compared to just 120 for an average Hollywood movie), and every line needs to be matched with a believable performance to keep the player immersed.

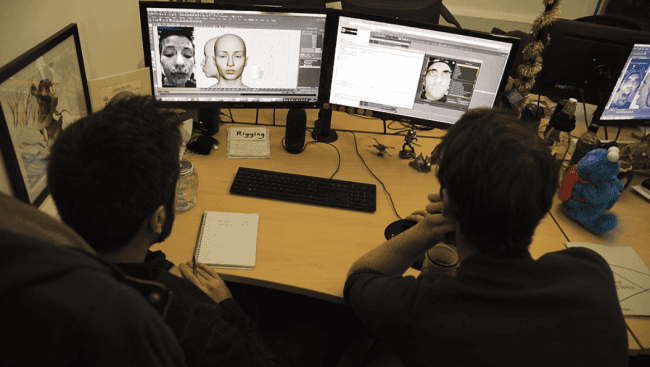

“We’re moving away from a world where facial animation is a jaw flapping,” says Steve Caulkin, chief technology officer of Cubic Motion, a company that specializes in capturing facial performances and turning them into animated characters in real time.

Instant VFX

Cubic Motion, which is based in Manchester, UK, has worked with developers including Activision, Epic, EA, Ninja Theory and Sony to create lifelike animations for stories that are increasingly deep and character-driven.

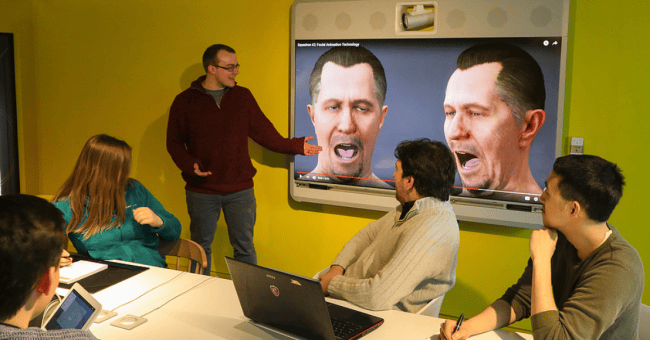

Real-time animations based on live performances give game directors a good idea of what the finished animation will look like before entering the full VFX process – much like the SIMULCAM virtual camera used by movie directors (like James Cameron on Avatar and Bryan Singer on X-Men: Days of Future Past) to preview VFX-heavy scenes on set.

The directors can then use that immediate feedback to help guide the actors. “It enables the director to visualize, review and play back,” says Caulkin, “bringing the visual effects into the filmic.”

Not only does that give the game makers more control, it also enables them to work faster and process a huge volume of footage. Instead of doing one take, they can do eight and see how they all look immediately.

Cubic Motion showed the process at GDC 2018, the world’s biggest game development conference, with a live demonstration of Siren – a collaborative project with Epic Games, 3Lateral, Vicon and Tencent.

Siren is a ‘digital human’ created by an international team of artists and engineers, and was ‘driven’ live by a human on stage – recreating an actress’s expressions and movements in real time.

Scaling up

Cubic Motion currently offers its technology and skills as a service to game developers and other clients, but it’ll soon be licensing a product as well. The challenge, Caulkin says, is scaling up the team’s technical and artistic expertise.

“It’s a meeting of two worlds, and we want to provide some semblance of that,” he says. “We have an R&D team who are experts in things like locating objects, and measuring where the pupil is in an eye. But if I’m a character and I smile, and the monster smiles, it’s an artistic decision. We’re trying to get that experience into the tech.”

Ultimately, Cubic Motion would like to be able to provide a package to clients containing a motion capture headset, software and support that can be used straight out of the box. That’s still some way off, but the company is working closely with clients to get the process started.

“We’ve done this several times now, and each time we’ve done things more smoothly,” says Caulkin. “We want to make it accurate, robust and repeatable.”

Siri, in the flesh

Video game animation makes up the bulk of Cubic Motion’s work – largely due to the differences between game and film production – but the company sees lots of potential for its technology in other areas, including online language lessons and live support.

“It allows you to do real-time interaction with digital characters – say for conferencing or chat,” says Caulkin. “Then we start to get into the realities of interactive digital characters. Something like Siri or Alexa that is able to have a virtual representation.”

Digital assistants are making huge strides in terms of AI, voice recognition and speech synthesis, but a realistic human face could be the factor that makes them feel truly ‘smart’.