Hello from the other side.

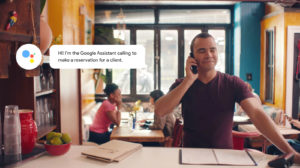

When Google unveiled the Duplex phone-calling reservation AI at I/O last month, the world was shook. Despite the potential convenience it presented, the system’s ability to mimic human inflections in conversation was uncanny and borderline creepy. Back then, we only heard recordings of what Assistant could do with Duplex technology. At a recent demo in New York, though, I got a chance to chat with the real thing, playing the role of a restaurant staffer on the call.

More people will be taking calls made by Google Assistant soon, as the company begins public tests of the Duplex technology. Only a select group of users and restaurants across the US will be involved in the initial wave, though. It might be a while before you can punch in a few parameters (number of people, time range) and have Assistant book reservations on the phone for you.

If you’re one of the lucky few, you might find the entire experience a little surreal, as I did. To be fair, most testers won’t be in the same situation I was — in a relatively quiet restaurant on the Upper East Side of Manhattan, carefully watched by an audience of about five people (including fellow tech reporters and Google’s product lead and engineers). I felt a little self-conscious as I picked up the phone and spoke with Assistant, but I think the AI should have been more worried.

“Hello, this is Thep Thai restaurant,” I yelled down the phone. I knew in advance it’d be Assistant on the line, thanks to the caller ID and the fact that this was a demo setup. To be clear, this was the real Duplex system we were invited to test, and we weren’t talking with prerecorded clips. It was altogether possible to trip up the system by being unintelligible or even unreasonable, and we were given free rein to confuse Assistant. So I tried to come up with common scenarios I could see being problematic.

After it identified itself and informed me the call would be recorded, Assistant asked, in a friendly male voice, to make a reservation for Friday, June 30th. “Sure, for how many people?” I asked.

“For four?” the Assistant replied with an upward inflection.

“I’m sorry, we don’t take reservations for parties smaller than five,” I said, immediately shutting Assistant down. After all, if I did indeed run a restaurant in New York, it would certainly be an exclusive establishment. Plus, I’d been on the receiving end of such requirements many times.

“Oh, OK. What’s the wait time like then?”

I was surprised by the follow-up. I was expecting Assistant to simply give up, but like a meticulous helper, it knew to ask for more information.

“It’s about an hour’s wait, we’re really busy on Fridays,” I riffed.

“OK, thank you,” the Assistant said. I had one last chance to throw Duplex off, so I went for it.

“Just so you know, on Fridays, we have an all-day brunch menu, so come prepared to eat just breakfast foods,” I quickly tacked on.

No response.

Assistant was either very confused or gone. I hung up, feeling slightly relieved that I had survived, but also exhilarated at having possibly tripped up the AI.

Duplex will fall back to a human operator when Assistant is overwhelmed, as happened on another reporter’s call. He used a fake thick accent and posed as a fictitious pizza parlor, then asked if the diner would be ordering the tiramisu because that’s a special order that needs advance preparation. Assistant gave up trying to understand the request and excused itself, saying it had to call in its manager. A human operator took over, and even he had trouble understanding the reporter’s question.

In these cases, Assistant is designed to “gracefully bow out,” said Scott Huffman, Google’s vice president of engineering. It will politely excuse itself before asking for human help, while reassuring the other person that there will be a follow-up. This politeness is a huge part of what makes Duplex so realistic and, thus, so creepy.

Huffman and team found that it was important for Assistant to be convincing, or businesses wouldn’t take it seriously. “It didn’t work if it didn’t sound real,” Huffman said.

To that end, Google stuffed a bunch of speech disfluencies (think: ums and ahs) into Duplex, not just to make Assistant sound more human, but also to facilitate smoother, more polite conversations. If the restaurant tries to confirm a reservation for the wrong number of people, for instance, Assistant will gently correct them instead of flatly saying no. “Um, actually, it’s for five” instead of “No, five people” sounds less antagonistic.

I’m not sure we really need AI to be polite for such systems to be effective. After all, when I was on the phone with Assistant, I knew right away it was a robot because it identified itself at the start. It was actually weirder to reconcile the realistic voice with my knowledge that it wasn’t an actual human. Sometimes, Assistant would lapse into a robotic cadence, like when it was reciting a phone number, which was also a little odd.

I understand the desire for human decency in dealing with a disembodied voice assistant: We don’t want to be jerks (most of us, anyway). I also get that Duplex is probably more effective if it sounds human, but because it identifies itself as the Assistant at the start of the call, there’s a bit of a disconnect. I knew from the get-go that I was talking to a robot, so the uncanny human-sounding voice was just distracting. I don’t think Google should do away with the disclaimer; I just wonder: When both parties on the call are aware that one of them is a robot, do we need to keep up the polite pretense? Can we get down to business and do away with the weirdness that is this enforced politeness?

Huffman sees Duplex as an evolution of voice technology that’s existed for years in things like automated phone operators for banks or airlines. While that’s true, and technically we shouldn’t feel any stranger about Duplex than we do about those systems, I still can’t shake the feeling that we need to tread carefully. Google is limiting the use of this technology for now and is taking pains to reassure us that it isn’t sinister. I fully understand Duplex’s potential benefits and marvel at how sophisticated and effective it is. But I don’t need AI to take on traits like politeness; leave human decency to us humans.

This article originally appeared on Engadget.