Microsoft’s HoloLens has an impressive ability to quickly sense its surroundings, but using it only to overlay emails or game characters on those surroundings would show a lack of creativity. New research shows that it works quite well as a visual prosthesis for the visually impaired. It relays no actual visual data; instead, it guides users in real time with audio cues and instructions.

The researchers, from Caltech and the University of Southern California, first argue that restoring vision is simply not a realistic goal at present. But recreating the perceptual side of vision isn’t necessary to replicate its practical side. After all, if you can tell where a chair is, you don’t need to see it to avoid it, right?

Crunching visual data and producing a map of high-level features like walls, obstacles, and doors is one of the core capabilities of the HoloLens, so the team decided to let it do its thing and recreate the environment for the user from these extracted features.

They designed the system around sound, naturally. Every major object and feature can tell the user where it is, either by voice or by sound. Walls, for instance, hiss (presumably with white noise, not a snake’s hiss) as the user approaches them. The user can also scan the scene, and objects announce themselves in order from left to right, each callout coming from the direction in which that object is located. A single object can be selected, and it will repeat its callout to help the user find it.
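To make the scan and the wall hiss more concrete, here is a minimal Python sketch of the ordering and loudness logic only. It is not the researchers’ code. It assumes a hypothetical head-relative frame (x in metres to the user’s right, z in metres straight ahead), and the names `SceneObject`, `scan_order`, and `wall_hiss_gain`, as well as the linear loudness curve and its 2 m onset, are illustrative inventions. Actual spatial audio rendering on the headset is out of scope.

```python
import math
from dataclasses import dataclass


@dataclass
class SceneObject:
    # Hypothetical head-relative frame: x = metres to the right (negative = left),
    # z = metres straight ahead of the user.
    name: str
    x: float
    z: float


def azimuth_deg(obj: SceneObject) -> float:
    """Horizontal angle of an object: 0 = straight ahead, negative = left, positive = right."""
    return math.degrees(math.atan2(obj.x, obj.z))


def scan_order(objects: list[SceneObject]) -> list[tuple[str, float]]:
    """(name, azimuth) pairs sorted left to right: the order a scan would call them out."""
    return sorted(((o.name, round(azimuth_deg(o), 1)) for o in objects),
                  key=lambda pair: pair[1])


def wall_hiss_gain(distance_m: float, onset_m: float = 2.0) -> float:
    """Assumed loudness curve: silent beyond onset_m, rising linearly to 1.0 at the wall."""
    if distance_m >= onset_m:
        return 0.0
    return 1.0 - max(distance_m, 0.0) / onset_m


room = [SceneObject("door", 1.0, 3.0),
        SceneObject("chair", -1.5, 1.5),
        SceneObject("couch", 0.0, 4.0)]
print(scan_order(room))  # [('chair', -45.0), ('couch', 0.0), ('door', 18.4)]
```

Sorting by azimuth is one simple way to get the left-to-right order the article describes; each callout’s azimuth would then drive where the voice appears to come from.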

That’s all well and good for stationary tasks like finding your cane or the couch in a friend’s house. But the system also works in motion.

The team recruited seven blind people to test it out. They were given a brief introduction but no training, and were then asked to accomplish a variety of tasks. The users could reliably locate and point to objects from audio cues, found a chair in a room in a fraction of the time they normally would, and avoided obstacles easily as well.

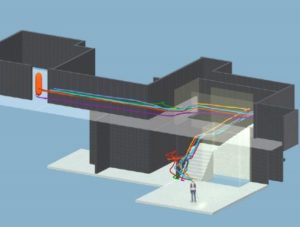

This render shows the actual paths taken by the users in the navigation tests.

Then they were tasked with navigating from the entrance of a building to a room on the second floor by following the headset’s instructions. A “virtual guide” repeatedly said “follow me” from an apparent distance of a few feet ahead, while also warning when stairs were coming, where the handrails were, and when the user had gone off course.
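The article doesn’t say how the guide’s position is computed, so the following is only a plausible Python sketch, not the team’s method. It assumes a precomputed route given as a 2D polyline of floor coordinates in metres (it ignores the stairs and the change of floor), projects the user onto that route, and places the guide a fixed distance further along it. The 1 m lead (standing in for “a few feet”), the 1.5 m off-course threshold, and all function names are hypothetical.

```python
import math

Point = tuple[float, float]


def point_along_path(path: list[Point], s: float) -> Point:
    """Point at arc length s (metres) along a polyline, clamped to the path's ends."""
    if s <= 0:
        return path[0]
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        if s <= seg:
            t = s / seg
            return (x0 + t * (x1 - x0), y0 + t * (y1 - y0))
        s -= seg
    return path[-1]


def project_onto_path(path: list[Point], p: Point) -> tuple[float, float]:
    """Return (arc length of the closest point on the path, distance from p to it)."""
    best_s, best_d, walked = 0.0, math.inf, 0.0
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        dx, dy = x1 - x0, y1 - y0
        seg_sq = dx * dx + dy * dy
        # Clamp the projection parameter so the closest point stays on this segment.
        t = 0.0 if seg_sq == 0 else max(0.0, min(1.0, ((p[0] - x0) * dx + (p[1] - y0) * dy) / seg_sq))
        d = math.hypot(p[0] - (x0 + t * dx), p[1] - (y0 + t * dy))
        if d < best_d:
            best_s, best_d = walked + t * math.sqrt(seg_sq), d
        walked += math.sqrt(seg_sq)
    return best_s, best_d


def guide_update(path: list[Point], user: Point,
                 lead_m: float = 1.0, off_course_m: float = 1.5) -> tuple[Point, bool]:
    """Where the 'follow me' voice should come from, and whether to warn about drifting off course."""
    s, d = project_onto_path(path, user)
    return point_along_path(path, s + lead_m), d > off_course_m


corridor = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]  # an L-shaped route, in metres
print(guide_update(corridor, (10.3, 5.0)))  # guide near (10.0, 6.0), not off course
```

Measuring progress as distance along the route, rather than straight-line distance to the goal, lets the guide lead the user around corners instead of toward walls.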

All seven users got to their destinations on the first try, and much more quickly than if they had had to proceed normally with no navigation. One subject, the paper notes, said “That was fun! When can I get one?”

Microsoft actually looked into something like this years ago, but the hardware just wasn’t there; HoloLens changes that. Even though the headset is clearly intended for sighted users, its capabilities happen to meet the requirements for a visual prosthesis like the one described here.

Interestingly, the researchers point out that this type of system was also predicted more than 30 years ago, long before it was even close to possible:

“I strongly believe that we should take a more sophisticated approach, utilizing the power of artificial intelligence for processing large amounts of detailed visual information in order to substitute for the missing functions of the eye and much of the visual pre-processing performed by the brain,” wrote the clearly far-sighted C.C. Collins way back in 1985.

The potential for a system like this is huge, but this is just a prototype. As systems like HoloLens get lighter and more powerful, they’ll go from lab-bound oddities to everyday items — one can imagine the front desk at a hotel or mall stocking a few to give to vision-impaired folks who need to find their room or a certain store.

“By this point we expect that the reader already has proposals in mind for enhancing the cognitive prosthesis,” they write. “A hardware/software platform is now available to rapidly implement those ideas and test them with human subjects. We hope that this will inspire developments to enhance perception for both blind and sighted people, using augmented auditory reality to communicate things that we cannot see.”

This article originally appeared on TechCrunch.