A startup with ties to Amazon is sampling a 16-nm chip, targeted mainly at data centers, that it claims handily beats CPUs and GPUs on deep-learning inference jobs. Habana is raising funds to support production and a roadmap that includes a 16-nm training chip sampling next year, as well as follow-on 7-nm products.

The startup is the latest to join a frothy AI sector of as many as 50 companies developing some form of machine-learning accelerator. To date, the big data centers driving the technology have typically run these workloads on the large banks of CPUs and GPUs that they already maintain.

The startup’s founders worked together at PrimeSense, which developed the depth-sensing technology that made its way into Microsoft’s Kinect and Apple’s iPhone X. Over their careers, the team members have worked on a total of 20 DSPs.

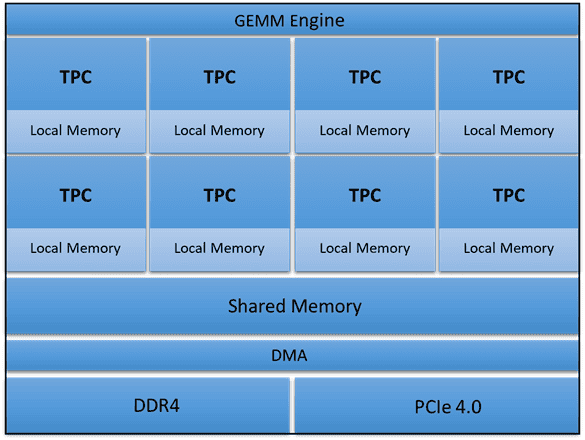

At the heart of Habana’s Goya inference chip are eight VLIW cores with a homegrown instruction set geared for deep learning and programmable in C. The startup claims that it has a library of 400 kernels that it and subcontractors created for inference tasks across all neural-network types. The chip supports a range of 8- to 32-bit floating-point and integer formats.

The Goya chip can process 15,000 ResNet-50 images/second with 1.3-ms latency at a batch size of 10 while running at 100 W. That compares with 2,657 images/second for an Nvidia V100 GPU and 1,225 for a dual-socket Intel Xeon 8180 system. At a batch size of one, Goya handles 8,500 ResNet-50 images/second with 0.27-ms latency.
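The comparison reduces to simple arithmetic. This Python sketch uses only the vendor-quoted figures in this article to compute Goya's throughput advantage over the V100 and the dual Xeon, its images/second per watt, and, via Little's law (items in flight = throughput × latency), roughly how many images are being processed at once. The constant and function names are illustrative. The Little's-law figures assume steady-state operation and that the quoted latency is per image, which the article does not specify. The article also gives neither the batch sizes nor the power draw for the V100 and Xeon runs, so no per-watt comparison across chips is attempted.

```python
# Vendor-quoted ResNet-50 inference figures from the article (names are illustrative).
GOYA_BATCH10_IPS = 15_000        # images/second at batch size 10
GOYA_BATCH10_LATENCY_S = 1.3e-3  # 1.3 ms
GOYA_BATCH1_IPS = 8_500          # images/second at batch size 1
GOYA_BATCH1_LATENCY_S = 0.27e-3  # 0.27 ms
GOYA_POWER_W = 100               # power quoted for the batch-10 run
V100_IPS = 2_657
XEON_8180_DUAL_IPS = 1_225


def speedup(a_ips: float, b_ips: float) -> float:
    """Throughput ratio of system a over system b."""
    return a_ips / b_ips


def images_in_flight(ips: float, latency_s: float) -> float:
    """Little's law: average number of images in process at steady state."""
    return ips * latency_s


if __name__ == "__main__":
    print(f"Goya vs V100:        {speedup(GOYA_BATCH10_IPS, V100_IPS):.1f}x")
    print(f"Goya vs dual Xeon:   {speedup(GOYA_BATCH10_IPS, XEON_8180_DUAL_IPS):.1f}x")
    print(f"Goya images/s per W: {GOYA_BATCH10_IPS / GOYA_POWER_W:.0f}")
    print(f"In flight, batch 10: {images_in_flight(GOYA_BATCH10_IPS, GOYA_BATCH10_LATENCY_S):.1f}")
    print(f"In flight, batch 1:  {images_in_flight(GOYA_BATCH1_IPS, GOYA_BATCH1_LATENCY_S):.1f}")
```

Run as-is, it reports about a 5.6x advantage over the V100 and 12.2x over the dual Xeon, 150 images/second per watt, and about 19.5 images in flight at batch size 10 versus about 2.3 at batch size one. Under the steady-state assumption, the batch-10 figure would be consistent with roughly two batches overlapping, but Habana has not described how Goya schedules work.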

The results are due to a combination of elements, including the chip’s architecture, mixed-format quantization, a proprietary graph compiler, and software-based memory management, said David Dahan, chief executive and co-founder of Habana and a former COO at DSP Group and PrimeSense.
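Habana has not said how its mixed-format quantization works. As a generic illustration only, this Python sketch shows symmetric per-tensor INT8 quantization, a common textbook scheme. It adds a hypothetical `choose_format` helper that keeps a tensor in FP32 when INT8 rounding would exceed an error tolerance. The function names and the tolerance rule are assumptions and do not represent Habana's method.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of floats to signed 8-bit codes.

    Returns the integer codes and the scale that maps them back to floats.
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    # Map the largest magnitude onto 127; use scale 1.0 for an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric range [-127, 127].
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Approximately reconstruct the original floats from the codes."""
    return [c * scale for c in codes]


def choose_format(values: List[float], tolerance: float) -> str:
    """Hypothetical per-tensor format choice for a mixed-format model.

    Keeps a tensor in INT8 if the worst-case reconstruction error stays within
    `tolerance`; otherwise falls back to FP32. Not Habana's algorithm.
    """
    codes, scale = quantize_int8(values)
    restored = dequantize(codes, scale)
    worst = max((abs(a - b) for a, b in zip(values, restored)), default=0.0)
    return "int8" if worst <= tolerance else "fp32"


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25]
    print(quantize_int8(weights))
    print(choose_format(weights, tolerance=0.01))  # int8
    print(choose_format(weights, tolerance=0.0))   # fp32
```

With these sample weights, the rounding error is at most half a quantization step (about 0.0039), so a tolerance of 0.01 selects INT8 while a tolerance of zero forces FP32. A production scheme would typically calibrate scales on real activation data rather than use a single max-abs rule.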

The company is not revealing the size of the chip’s multiply-accumulate (MAC) unit, how much shared and local memory it contains, or its raw performance in operations/second. It did say that its 200-W TDP chip fits in a 42.5 x 42.5-mm package and rides an x16 Gen4 PCIe card with 4 GBytes of memory.

The company demonstrated Goya running graphs from MXNet, the framework favored by Amazon, and from the ONNX model format. It is developing TensorFlow support and ultimately aims to support all major AI software frameworks.

The startup is also not revealing how much funding it has raised to date or how much it seeks in its current round. Its initial backers included Bessemer, Walden, and its chairman, Avigdor Willenz, the former chief executive of Annapurna Labs, the chip startup acquired by Amazon.

Habana aims to begin sampling its 16-nm Gaudi chip for training neural nets in Q2 2019. Gaudi uses the same VLIW core as Goya, is software-compatible with it, and sports 2-Tbit/second interfaces to support scaling to clusters of thousands of devices.